Continuous ratings of emotional force were collected for the two versions of The Angel of Death in two premiere concerts. According to Schubert (2001), this scale probably taps primarily into the arousal component of emotional reaction. This is partly confirmed by Dubnov, McAdams & Reynolds (in press), who model the acoustic origins of emotional force reactions as a combination of information rate, energy, and spectral centroid (a faster rate, higher energy, and a higher spectral centroid yield higher emotional force). It should be noted, however, that simply equating emotional force with energy, as proposed by Schubert (1999, 2001) for example, is an oversimplification, because there are clear moments in the piece where energy is low and emotional force is high, and vice versa. It is the combination of parameters, together with the more global trajectory of the musical discourse, that appears to determine the emotional response. Indeed, for tonal music (Mozart), Krumhansl (1996) found that tension ratings were largely unchanged after changes in loudness and/or tempo were taken out, which suggests that harmonic structure plays an important role. In contemporary music, which lacks the overlearned harmonic structures of tonal music, loudness and tempo are likely to play a more important role in emotional experience.
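The idea that emotional force reflects a combination of descriptors, rather than energy alone, can be illustrated with a minimal sketch. It assumes, purely for illustration, an equally weighted linear sum of z-scored descriptors; the linear form, the weights, and the function names are hypothetical and are not the model actually fitted by Dubnov, McAdams & Reynolds (in press). The toy input shows why energy alone is insufficient: the final sample has the lowest energy but the highest predicted force.

```python
from statistics import mean, pstdev


def zscore(xs):
    """Standardize a series to zero mean and unit (population) standard deviation."""
    m, s = mean(xs), pstdev(xs)
    # A constant series carries no variation, so map it to zeros.
    return [(x - m) / s if s > 0 else 0.0 for x in xs]


def predicted_force(info_rate, energy, centroid, weights=(1.0, 1.0, 1.0)):
    """Hypothetical predictor: weighted sum of standardized descriptors.

    Positive weights encode the reported directions: a faster information
    rate, higher energy and a higher spectral centroid all raise the
    predicted emotional force. The linear form itself is an assumption.
    """
    features = [zscore(info_rate), zscore(energy), zscore(centroid)]
    return [
        sum(w * f[t] for w, f in zip(weights, features))
        for t in range(len(energy))
    ]


# Toy descriptors (arbitrary units): energy falls while rate and centroid rise.
info_rate = [1.0, 2.0, 3.0, 4.0]
energy = [4.0, 3.0, 2.0, 1.0]
centroid = [1.0, 2.0, 3.0, 4.0]
force = predicted_force(info_rate, energy, centroid)
```

In a real analysis the weights would be estimated from rating data (for instance by least-squares regression), and nonlinear or time-lagged relations could matter as much as the choice of descriptors.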

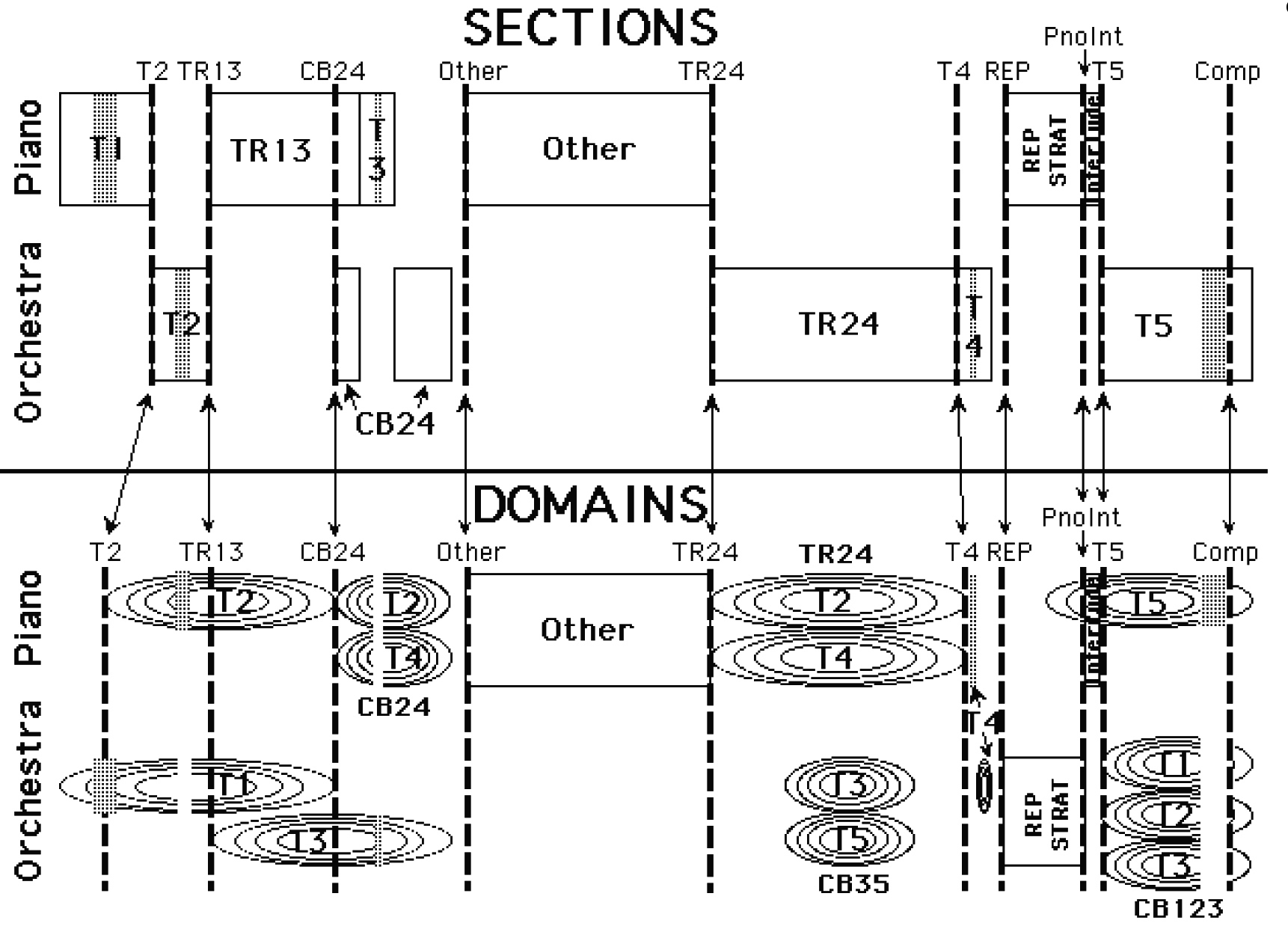

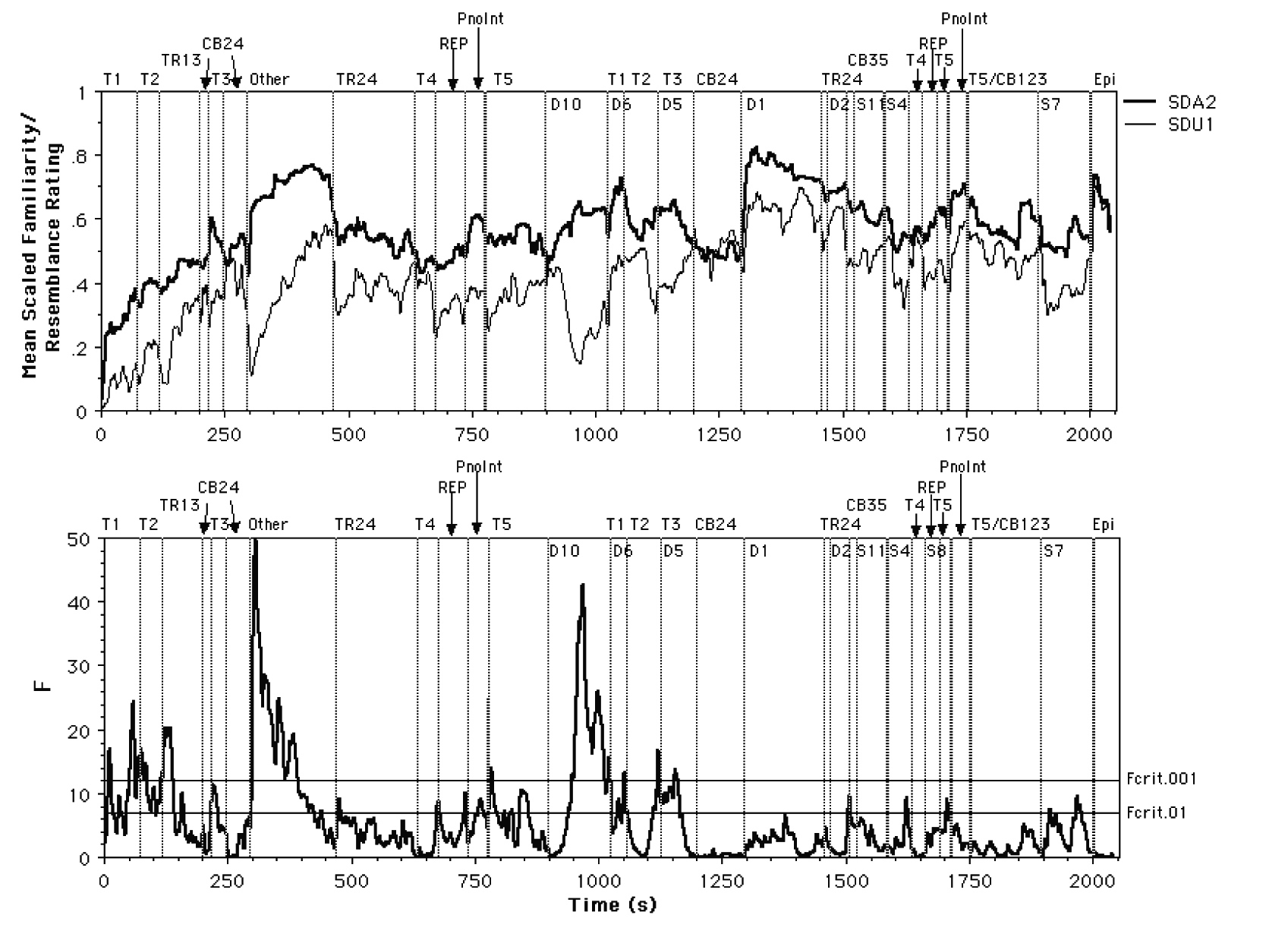

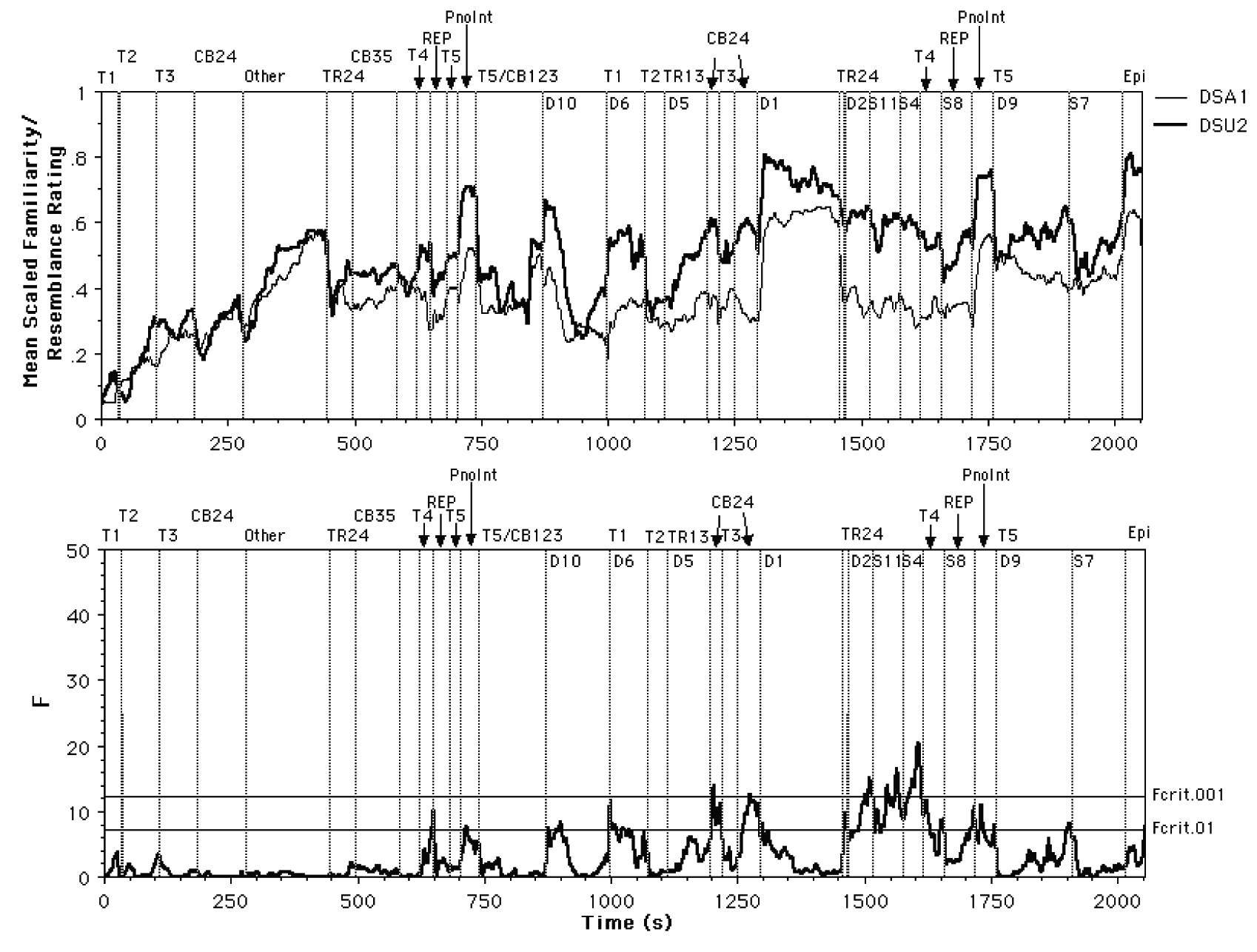

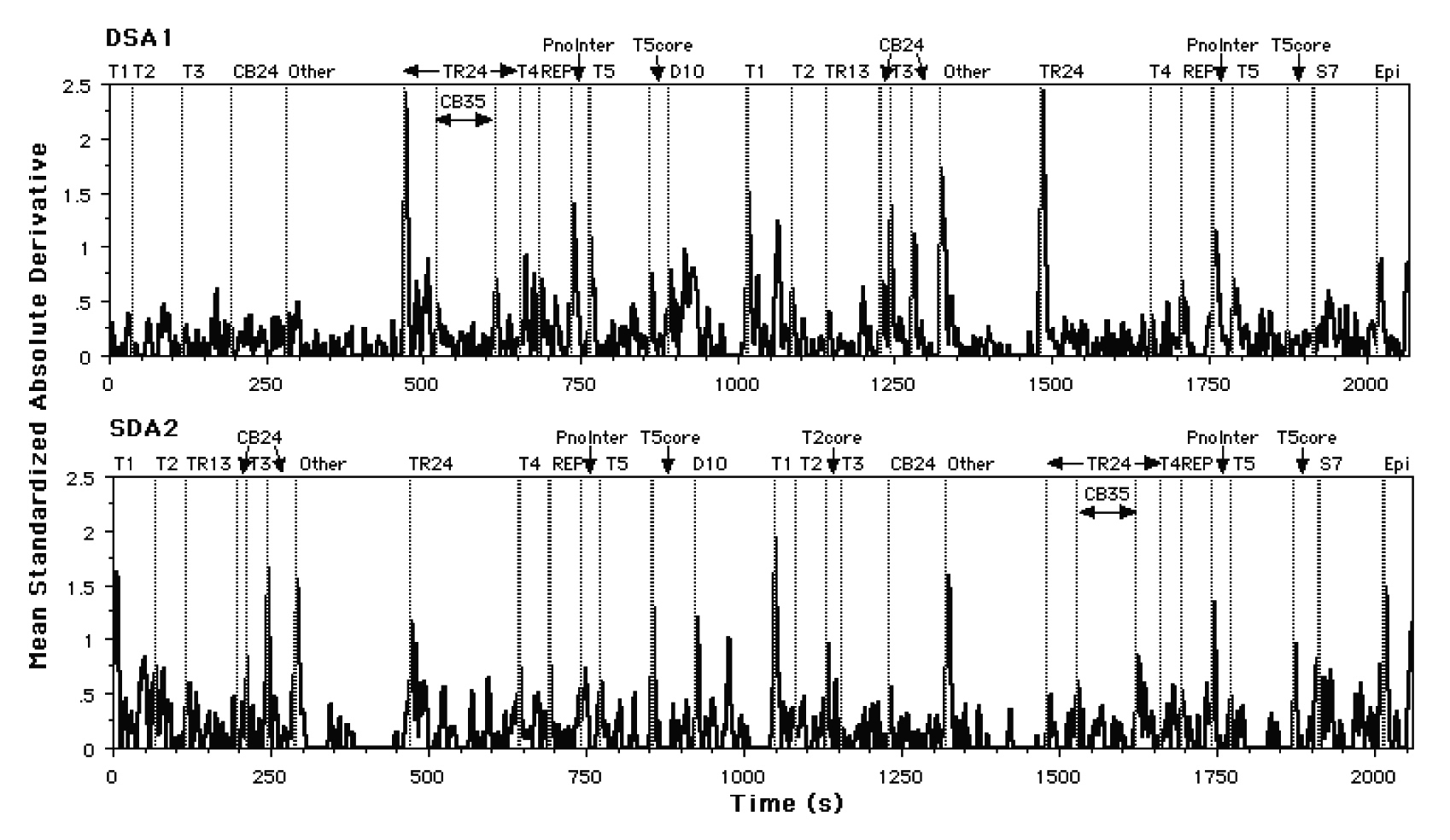

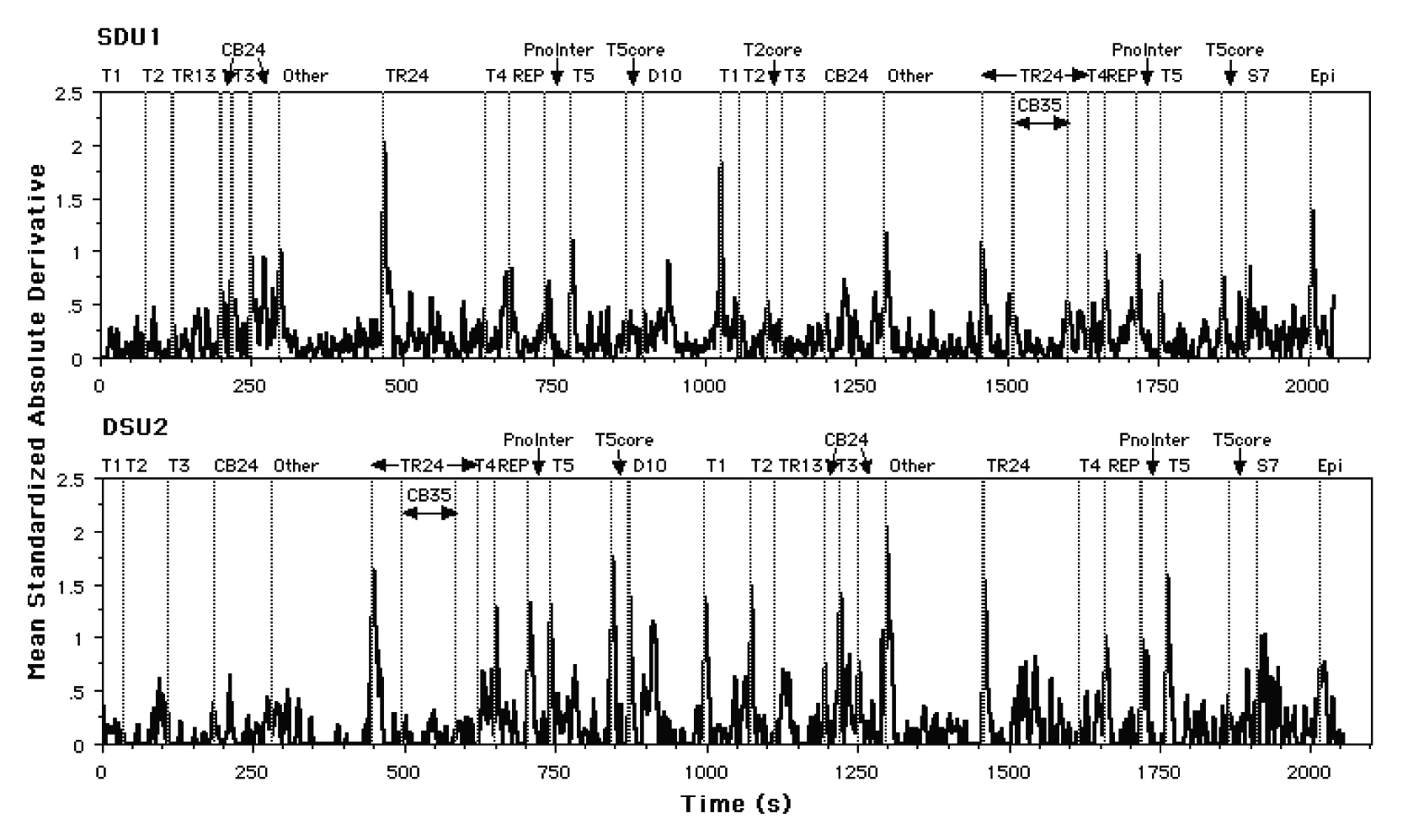

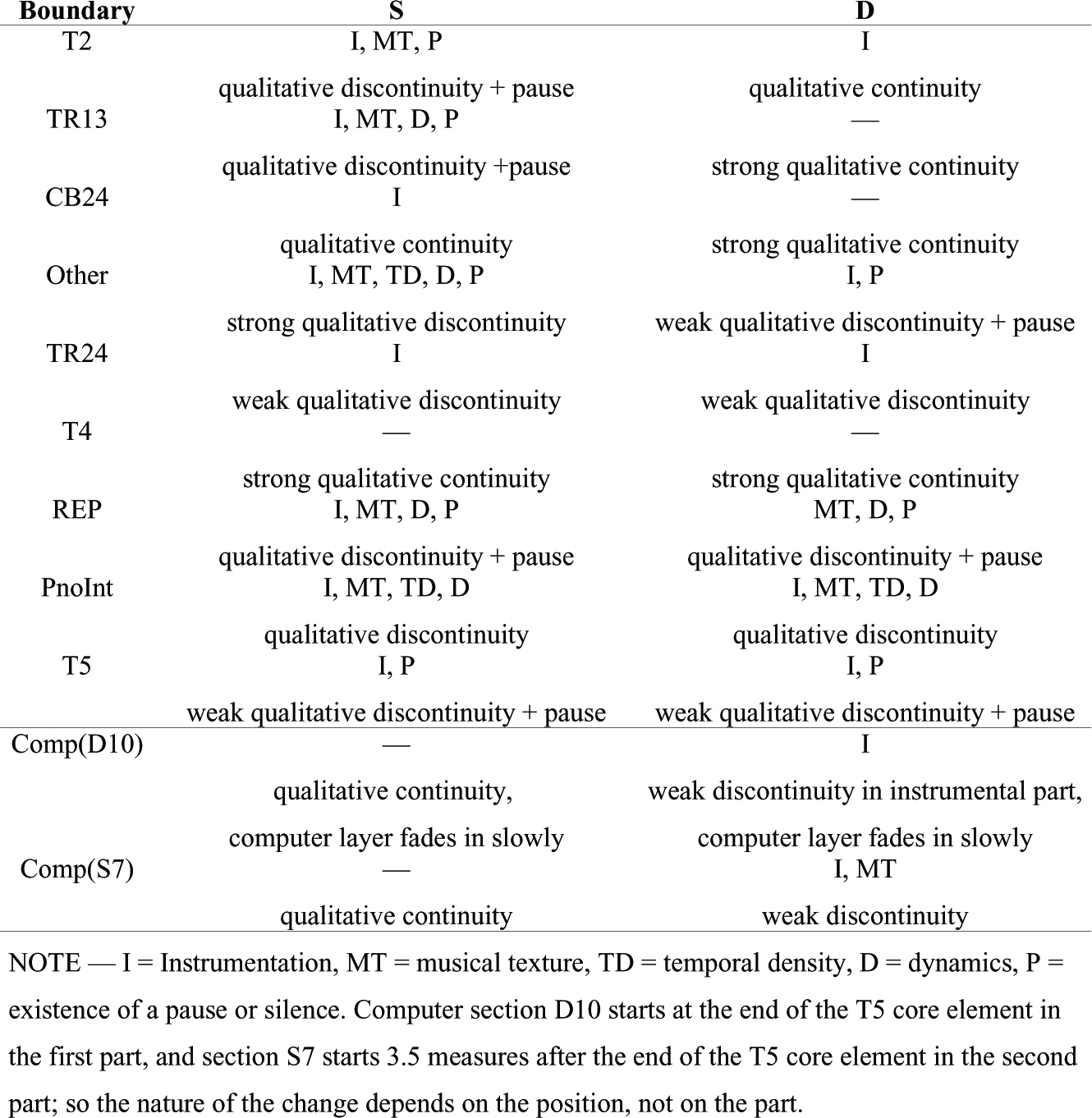

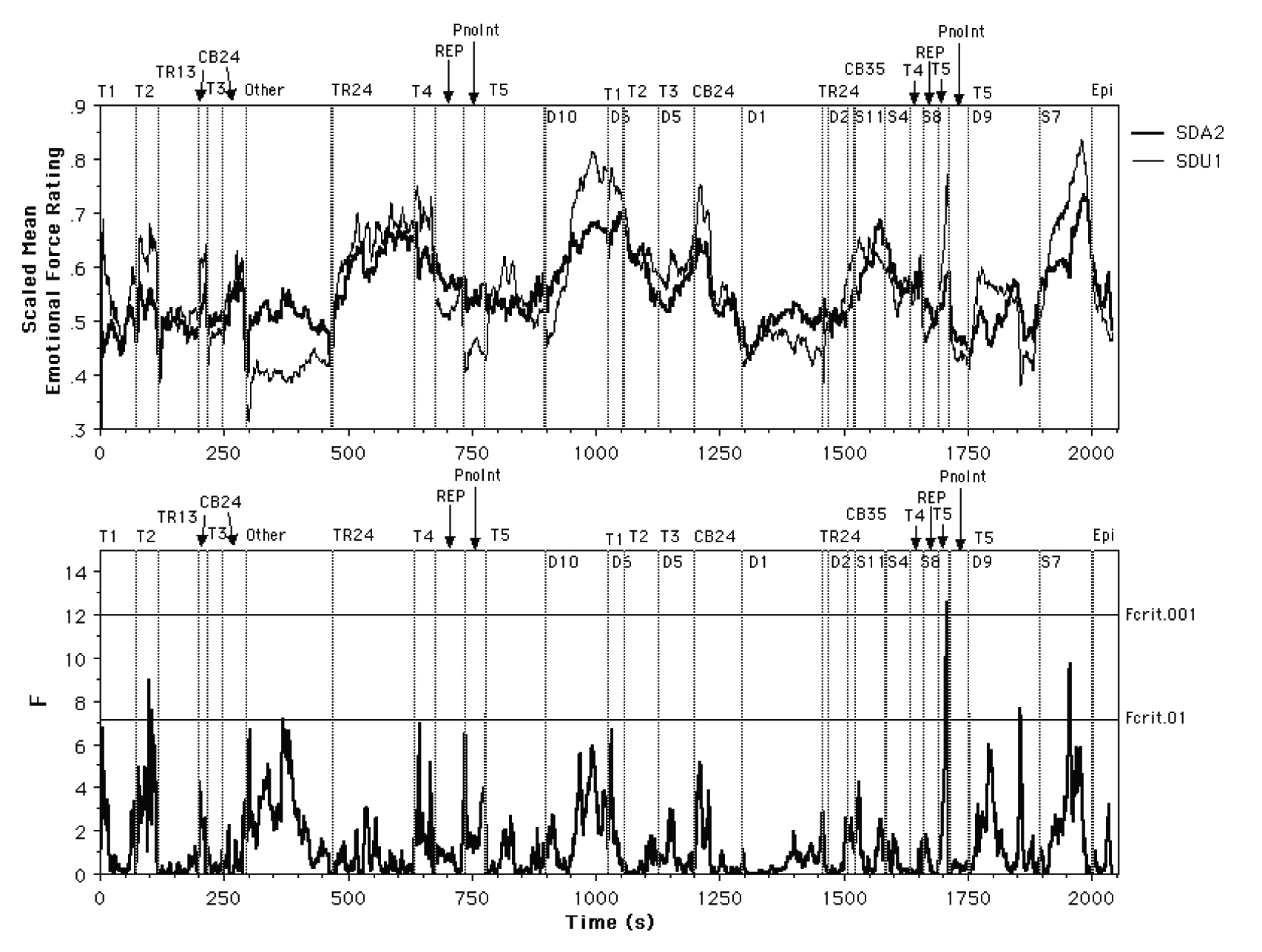

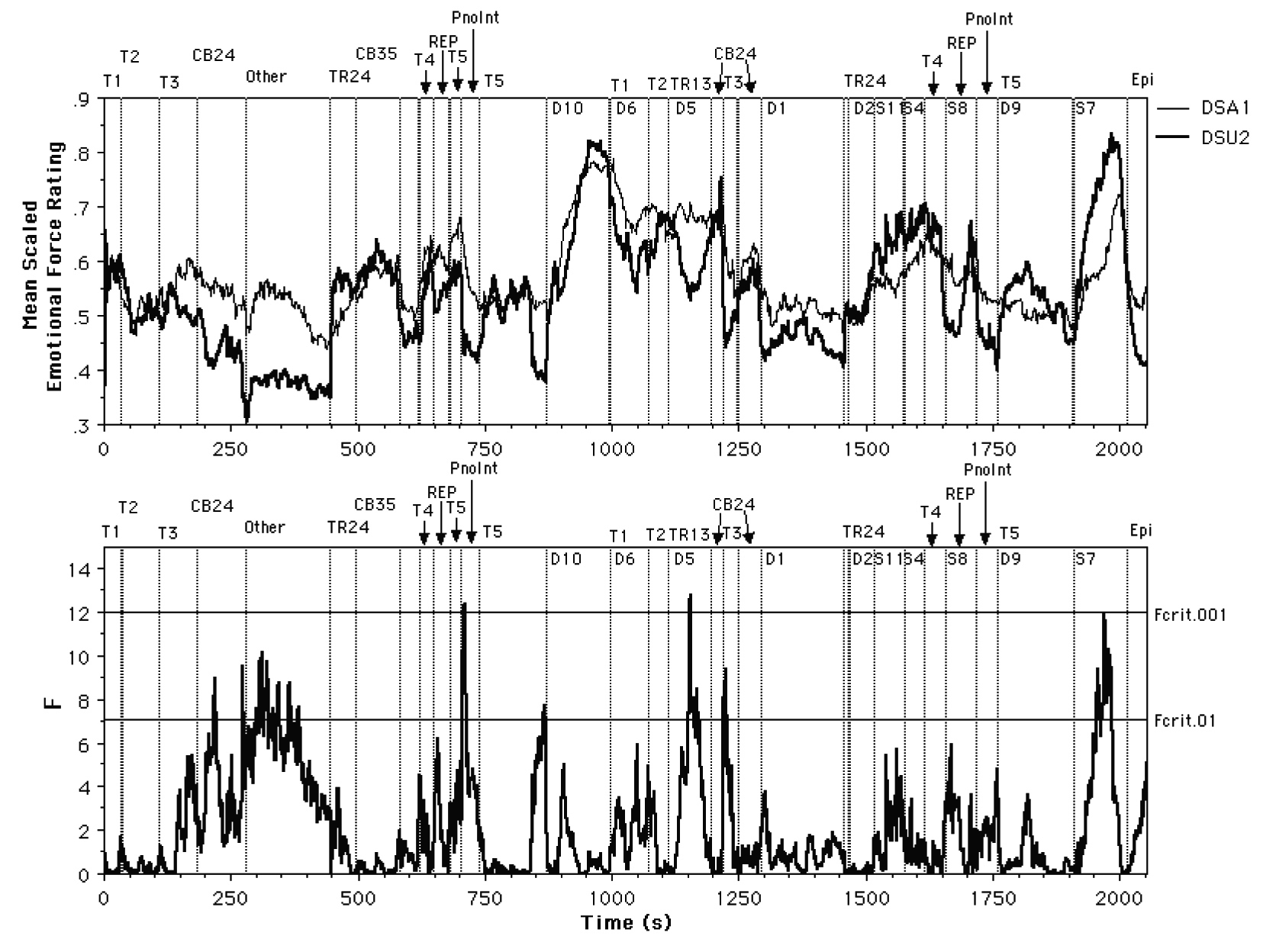

Although a majority of the listeners, and most particularly the nonmusicians in the audience, were unfamiliar with contemporary music in general and Reynolds' music in particular, the music clearly affected the participants emotionally, as evidenced by individual emotional force profiles that varied strongly over the course of the piece. Further, there is a strong relation between the mean profile (representing the collective response) and the structure of the piece, although the structure revealed by the emotional force data diverges in interesting ways from that revealed by the familiarity/resemblance data. One of the striking features of the mean profile is its apparent organization as a series of hierarchically nested arch-like forms. The emotional force profiles reveal the importance of the computer solos (D10 and S7) and the developmental sections (particularly TR24 and CB24) for high emotional impact, as well as the reflective periods of emotional repose at the low end of the scale in Other, the piano interlude, T5's core element, and the final dénouement with the Epilog. The arch forms recall performance phrasing profiles (Penel, 2000; Todd, 1995). However, those profiles operate at the phrase level, whereas the present study involves suprasectional time spans.

The nested arch structure found in the emotional force data is also analogous to the hierarchies of structure and affect (often discussed in relation to cycles of musical tension and release) that Lerdahl describes for tonal music in his Tonal Pitch Space theory (Lerdahl, 2001). The presence of natural modulations in emotional response suggests that nontonal contemporary music and traditional tonal music give rise to similar dynamics of experience, although these are probably engendered by different structural properties of the music. More broadly, there seem to be invariants in human affective response that are tapped by these different genres of art performance. Until recently, music cognition research focused on Western tonal music, but increasing attention is being devoted to diverse musical cultures (e.g., Ayari & McAdams, 2003; Balkwill & Thompson, 1999). It is interesting to see similarities across performance genres such as those revealed in this investigation. Through this research we may come to understand more about the variants and invariants of human emotion and experience.

The composer finds it entirely reasonable that emotional force tends not to derive from thematic elements per se. He feels that it is often not the posited element (the fact, the identity, the motive, the character, the theme) that moves us, but rather what happens to the things we have recognized or internalized: transformation, not identification, leads to emotion. He said that in composing the piece he was indeed thinking about nested emotional hierarchies (though perhaps not as a conscious goal): for example, T1 being assertive and linear, followed by T2, which disrupts while maintaining the energy level, and then the transition out of this state of disruption through milder, continuous alternations (modified interruptions) in TR13 that lead to a gentle, whirring close in T3. Searching for arches of emotive response could be a fruitful way of examining the design and effect of musical experience. Future work could examine how such a model of the judicious shaping of emotional context compares across musical styles and periods, particularly with regard to the temporal proportions among arch-shaped emotional sections.

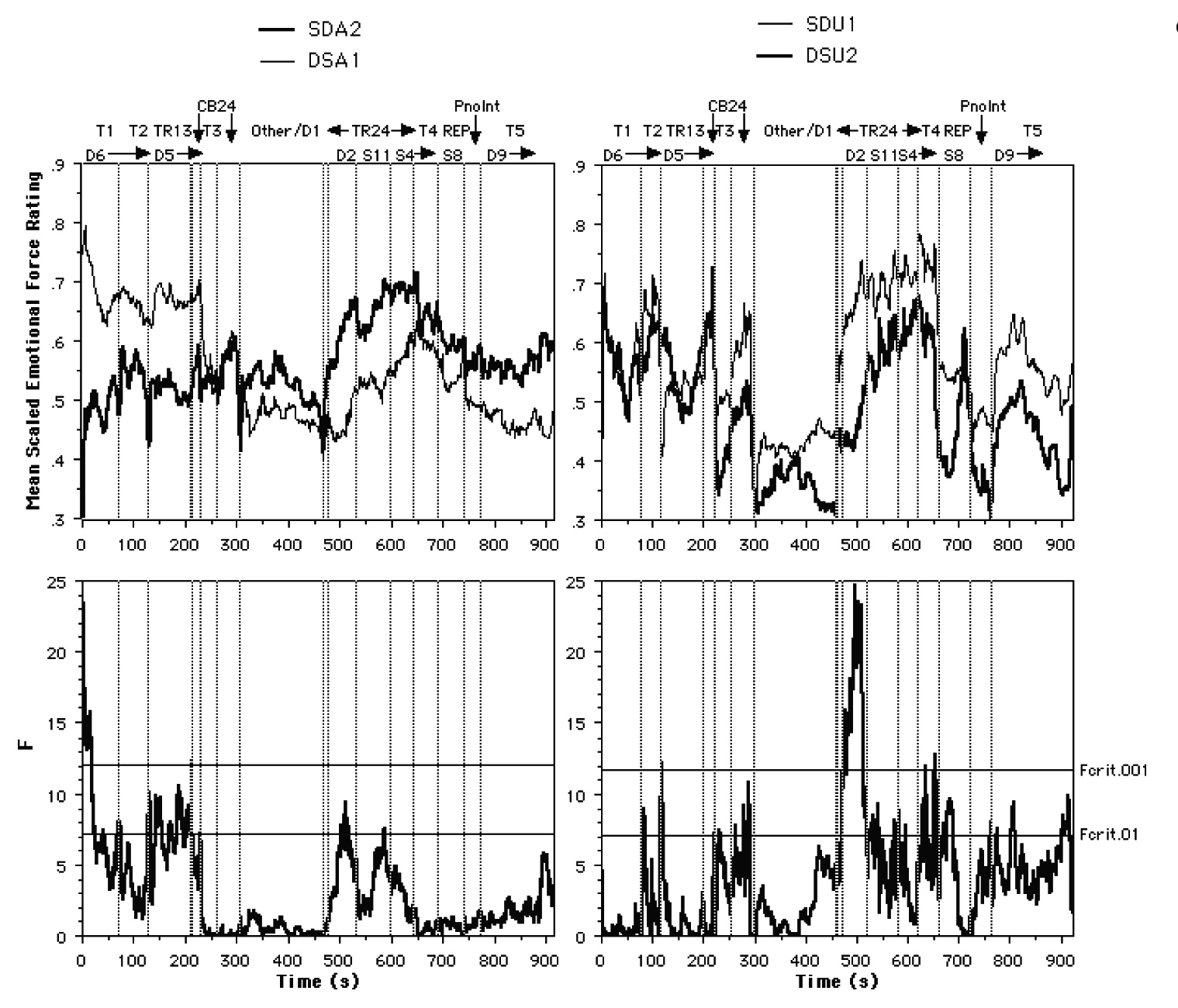

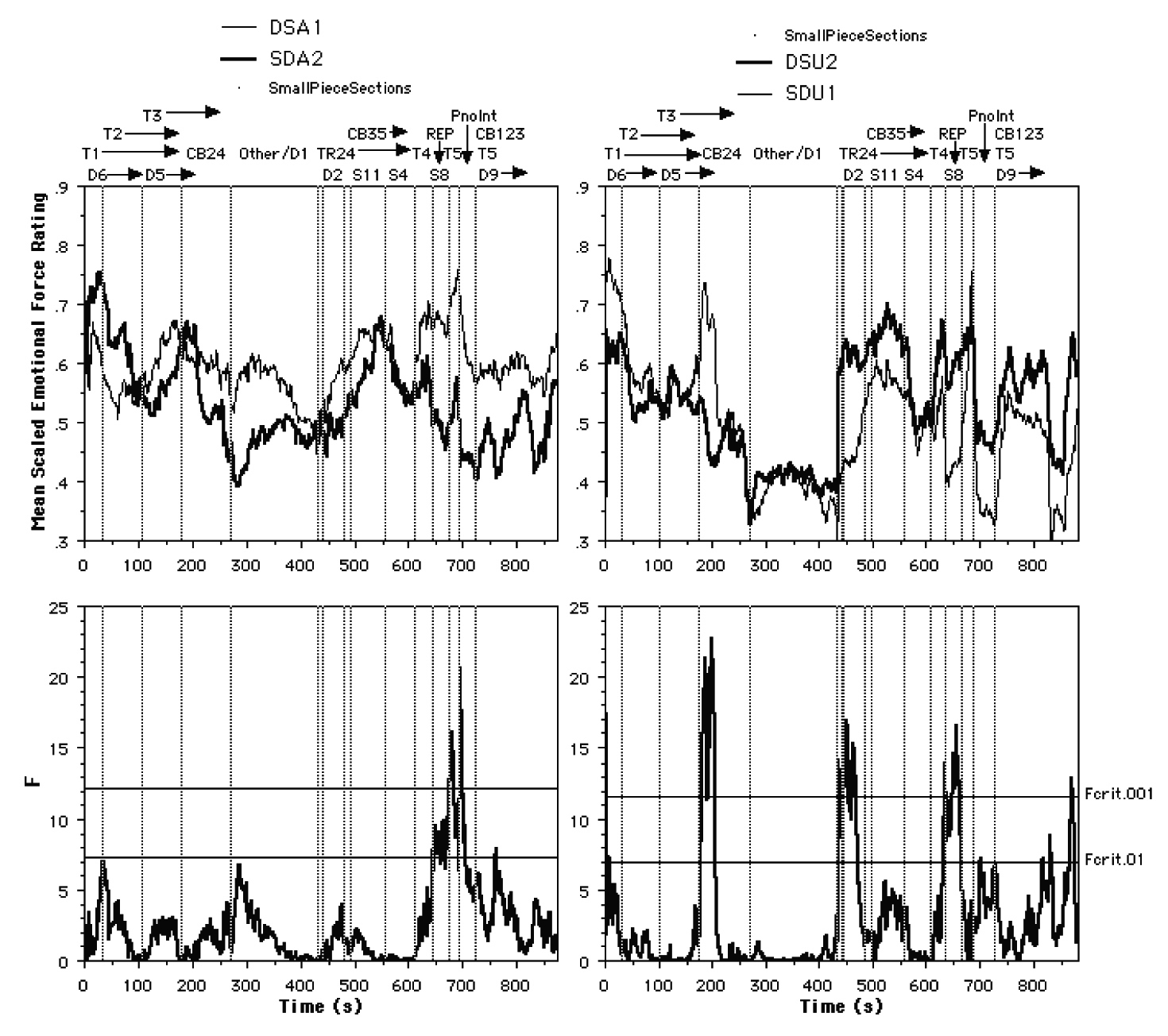

The general emotional shape is fairly similar across interpretations (perhaps owing to the composer's presence at all of the rehearsals), with some notable differences that can mostly be attributed to the conductors' interpretations (the orchestral rendering styles of Valade and Sollberger differ considerably in this respect). Despite variation in a number of factors, including performance venue, performers, audience members, conductors, intervening musical pieces, and cultural context (Paris, France vs. San Diego, California), the emotional "fingerprint" of the composition was preserved.

What is striking, however, is that the differences modulate a large-scale emotional form that is closely tied to the musical structure. The La Jolla performance did seem more "emotional" to those of us who were present at both concerts. Further, it probably had a better overall "sound" because the Mandeville Auditorium is larger than the Grande Salle. It could be that the broader physical and visual perspective gave listeners a more "secure" basis for judgment, and that the more spacious seating in La Jolla placed listeners less close to one another. If audience members' movement was restricted in the Grande Salle, this might have reduced the emotion they experienced. Research has shown that when emotional expression is inhibited, the experience of emotion and the related physiological changes are dampened (Hatfield et al., 1994). The expressive movements audience members might make include swaying, postural adjustments, and similar movements. Another potential source of the difference between concerts is the visual aspect of the performance. Vines et al. (2003) have found that being able to see a performer can augment emotional experience (as measured by musical tension ratings) during a performance. However, because the Mandeville audience was larger and its listeners were further from the stage, this account would predict the opposite of what was observed in the data. Given that the difference in rated emotional force was not uniform across the piece, but was reflected mainly in the abruptness of changes in the mean profile, the differences seem more likely to be due to interpretation. Studying the derivatives of these profiles may provide further information along these lines, if the problem of fitting functional data objects to profiles with missing data can be solved in the future.
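One simple way to approach the derivatives mentioned above, sidestepping the functional-data fitting problem rather than solving it, is to take finite differences of the mean profile and skip any interval that touches a missing sample. The following sketch assumes ratings stored as equal-length per-listener lists, with None marking missing samples; the function names and the "abruptness" index (largest absolute slope) are hypothetical illustrations, not the analysis used in this study.

```python
def mean_profile(ratings):
    """Average listeners' ratings sample by sample, skipping missing values (None)."""
    n_samples = len(ratings[0])
    profile = []
    for t in range(n_samples):
        values = [r[t] for r in ratings if r[t] is not None]
        # If every listener is missing at this sample, the mean is undefined.
        profile.append(sum(values) / len(values) if values else None)
    return profile


def first_difference(profile, dt=1.0):
    """Finite-difference slope between consecutive samples; None if either is missing."""
    return [
        (b - a) / dt if a is not None and b is not None else None
        for a, b in zip(profile, profile[1:])
    ]


def abruptness(profile, dt=1.0):
    """Largest absolute slope: a crude index of how sharply the profile changes."""
    slopes = [abs(s) for s in first_difference(profile, dt) if s is not None]
    return max(slopes) if slopes else 0.0


# Two toy mean profiles with the same start and end but different shapes.
gradual = [2.0, 3.0, 4.0, 5.0, 6.0]
sudden = [2.0, 2.0, 2.0, 6.0, 6.0]
```

With these toy profiles, `abruptness(gradual)` is 1.0 and `abruptness(sudden)` is 4.0: both rise by the same total amount, but only the second does so in a single jump, which is a contrast in shape rather than overall level. Raw differences amplify rating noise, so in practice some smoothing would presumably precede differencing.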

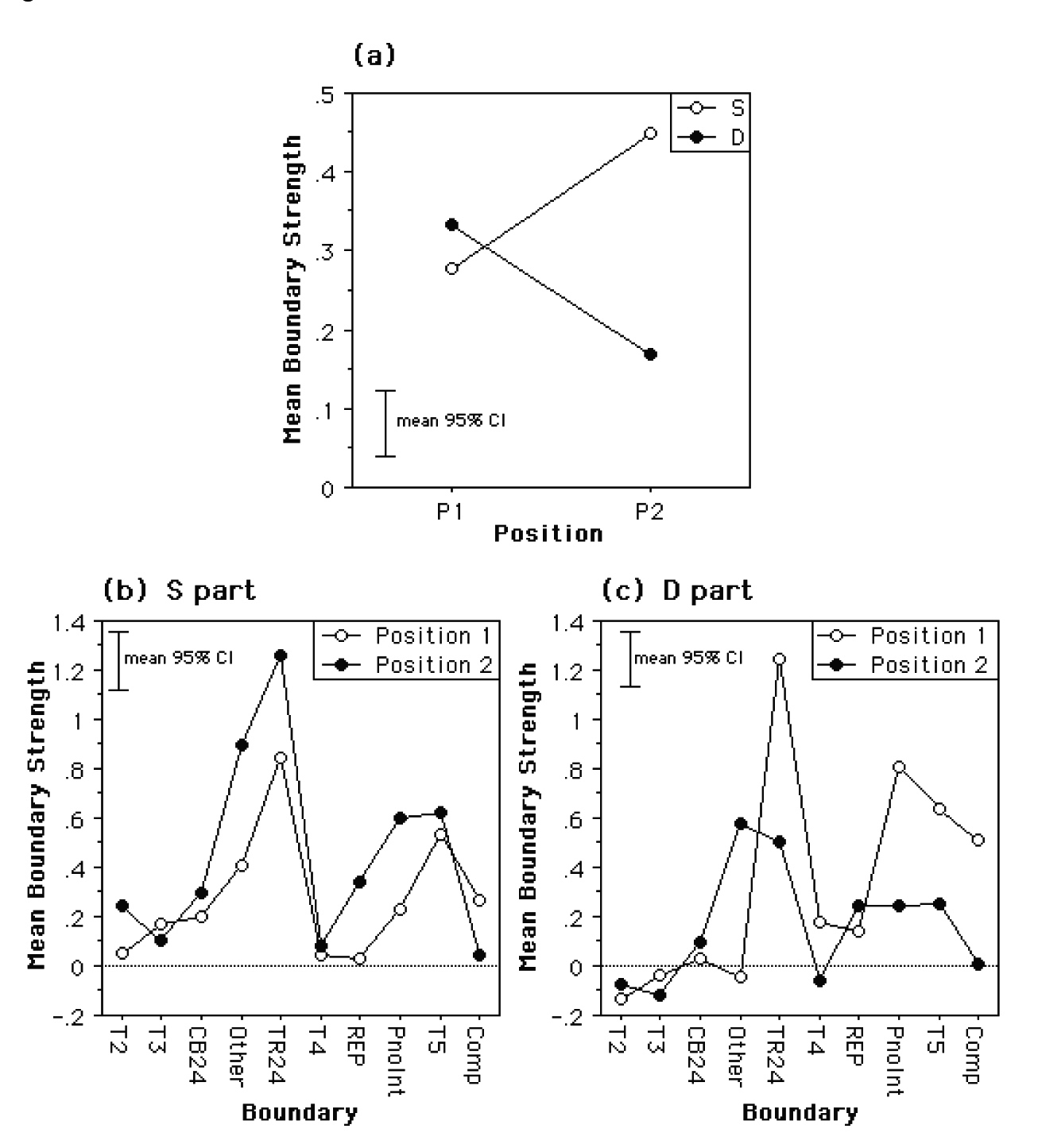

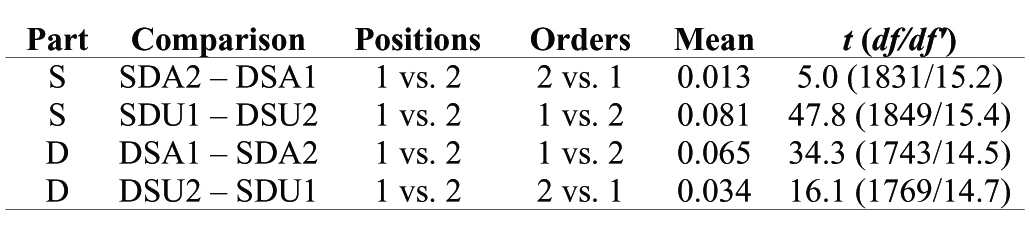

One of the more striking features of these data concerns the effects of previous exposure to the materials (in a preceding part of the piece or a preceding version in the concert). Following Meyer (1956), expectation and surprise should play a strong role in emotional response, and these could develop with repeated exposure to the musical grammar and style. Emotional force depends to some extent on the position of the parts and less on the order in which the versions were heard, but both effects point in the same direction: repetition slightly diminishes emotional force. One may therefore wonder whether the change is related to satiety. Although the role of satiety in emotional response has received little exploration, its role in preference and liking has been theorized. According to Berlyne (1970), the evolution of preference with exposure depends on the initial level of activation potential of the stimulation (most likely associated with arousal in the present case), and in particular on its initial perceived complexity (contemporary music) and novelty (premiere concert). The basic idea is that appreciation diminishes at high levels of familiarity. This may be related to the notion of psychological complexity (Dember & Earl, 1957): individuals prefer stimuli whose complexity matches their optimal level of psychological complexity, which depends on and increases with experience (and learning). While such considerations may be relevant over many hearings, it is hard to imagine that they operate over the two parts of a piece or across two versions in the same concert. Given the high complexity of the music and the attentional and vigilance demands of the continuous rating task (over two periods of 35 min), the experimental situation may have played a role in the relative decrease in emotional force with repeated exposure.
This interpretation fits with Schubert's (1996) suggestion that habituation could reduce emotional force through a gradual increase in the activation threshold of "emotion nodes" in a network of the general type proposed by Martindale (1984) to explain the appreciation of negative emotions in aesthetic contexts. The idea that repetition diminishes emotional force is perhaps not so problematic at the first-encounter stage under consideration here. One would hope or expect, however, that over time, as listeners began to integrate all aspects of a work, especially in a compelling performance, they would recover and even enlarge upon the original, novelty-influenced experience of emotional force. Experiments are planned to examine the effects of repeated exposure, over several listenings across several days, on continuous ratings; such effects are particularly relevant for a musical style as inherently complex as that of The Angel of Death.